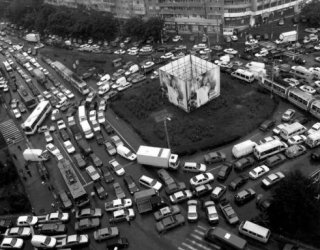

Traffic under control

The traffic I am talking about isn't the kind webmasters like so much. It's network traffic in general, the kind usually called bandwidth in hosting-related discussions.

There is no such thing as unlimited bandwidth, so the ability to control traffic flow and to react to surges, or to a predefined amount being consumed, is very important.

In almost every situation, the quicker the response to traffic consumption, the better. SNMP (Simple Network Management Protocol) provides the means to handle certain traffic-related tasks, and some of them can be handled with IPHost Network Monitor. Let's be more specific.

Counters and triggers

Traffic counters are provided by many SNMP-capable networking devices. These include routers, network adapters, and so on.

A typical task is to notice a traffic surge and take measures: for example, shut down the line to prevent too much traffic from being consumed, and notify the administrators.

The task is both simple and complex. SNMP-enabled devices do provide traffic counters (OIDs such as .1.3.6.1.2.1.2.2.1.10.N for inbound traffic and .1.3.6.1.2.1.2.2.1.16.N for outbound traffic, where N is the interface index), but these counters have one significant property: in general, they can't be reset through any API or software tool. They can only grow and wrap around at a fixed maximum, which depends on whether they are 32 or 64 bits long. The OIDs shown are the 32-bit ifInOctets and ifOutOctets; 64-bit variants, where a device supports them, have their own OIDs.
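Because the counters only grow and wrap, the traffic between two readings is a difference taken modulo the counter size. The following is a minimal sketch of that arithmetic; the function name `counter_delta` is hypothetical, and it assumes the counter wrapped at most once between the two readings.

```python
def counter_delta(previous: int, current: int, bits: int = 32) -> int:
    """Bytes counted between two readings of a wrapping SNMP counter.

    Assumes at most one wrap-around happened between the readings;
    more than one wrap cannot be detected from two samples alone.
    """
    if bits not in (32, 64):
        raise ValueError("SNMP counters are 32 or 64 bits wide")
    modulus = 1 << bits
    # Modular subtraction gives the right answer whether or not the
    # counter wrapped past its maximum between the two readings.
    return (current - previous) % modulus


# No wrap: the counter simply grew by 150.
print(counter_delta(100, 250))
# One wrap of a 32-bit counter: 296 bytes up to the maximum, then 704 more.
print(counter_delta(4294967000, 704))
```

The single-wrap assumption limits how rarely the counter may be polled: a 32-bit byte counter on a link carrying 1 Gbit/s wraps roughly every 34 seconds.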

However, a simple script that reads these counters and calculates averages or totals is easy to implement, so we can set up simple traffic control as follows.

First, we create such a script and call it periodically from IPHost Network Monitor. The script prints a numeric string to standard output, and the monitor reads it as an integer value indicating traffic consumption.
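Such a script could look roughly like the sketch below. It is not the script shipped with any product: the function names and the JSON state file are hypothetical, the counter is assumed to be 32 bits wide, and the reader function assumes the Net-SNMP `snmpget` command-line tool is installed and that the device answers SNMP v2c requests.

```python
import json
import subprocess
from pathlib import Path
from typing import Callable

COUNTER_MODULUS = 1 << 32  # assumption: a 32-bit counter such as ifInOctets


def read_counter_with_snmpget(host: str, community: str, oid: str) -> int:
    """Read one counter through the Net-SNMP `snmpget` CLI (assumed installed)."""
    result = subprocess.run(
        # -Oqv asks snmpget to print only the bare value
        ["snmpget", "-v2c", "-c", community, "-Oqv", host, oid],
        capture_output=True, text=True, check=True,
    )
    return int(result.stdout.strip())


def bytes_since_last_poll(read_counter: Callable[[], int], state_file: Path) -> int:
    """Return bytes counted since the previous call; the reading is kept on disk."""
    current = read_counter()
    previous = current  # first run: nothing to compare with, so report 0
    if state_file.exists():
        previous = json.loads(state_file.read_text())["counter"]
    state_file.write_text(json.dumps({"counter": current}))
    # Modular subtraction tolerates one counter wrap between polls.
    return (current - previous) % COUNTER_MODULUS
```

To use it as a monitoring script, the last step would be to print the result, for example `print(bytes_since_last_poll(lambda: read_counter_with_snmpget(host, community, oid), Path("traffic_state.json")))`, where `host`, `community`, `oid` and the file name are placeholders to fill in.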

Second, we create a custom alerting rule that uses the «Set SNMP value» alert. Since the field controlling a network interface's state (typically ifAdminStatus) is read-write, we can effectively block all traffic through an interface with a single SNMP value change.

Now we can shut down an interface. To re-enable it, we create another rule that is triggered when the traffic state returns to normal (i.e., the script returns an acceptable value) and sets the same field back to the value that enables traffic flow.
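The two rules boil down to choosing which value to write to the interface state field. The sketch below shows that choice, assuming the standard IF-MIB object ifAdminStatus (.1.3.6.1.2.1.2.2.1.7.N, where 1 means up and 2 means down) and assuming the device allows it to be written. The function name and the threshold are hypothetical; in IPHost Network Monitor the write itself is done by the alert, not by code like this.

```python
from typing import Tuple

IF_ADMIN_STATUS = ".1.3.6.1.2.1.2.2.1.7"  # IF-MIB ifAdminStatus column
ADMIN_UP = 1    # interface administratively enabled
ADMIN_DOWN = 2  # interface administratively disabled


def snmp_set_for_traffic(traffic_bytes: int, limit_bytes: int,
                         if_index: int) -> Tuple[str, int]:
    """Return the (OID, integer value) pair to write for the measured traffic."""
    status = ADMIN_DOWN if traffic_bytes > limit_bytes else ADMIN_UP
    return f"{IF_ADMIN_STATUS}.{if_index}", status


# Over the limit on interface 3: write 2 (down) to ifAdminStatus.3
print(snmp_set_for_traffic(2000, 1000, 3))
# Back within the limit: write 1 (up) to the same OID
print(snmp_set_for_traffic(500, 1000, 3))
```

One design point to keep in mind: if the script reports traffic per polling interval, the value drops to zero once the interface is down, so the re-enabling rule would fire at the next poll. Measuring against a longer accounting period avoids that.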

Caveats

There are three versions of SNMP supported. Roughly speaking, the higher the version, the more means of access control it provides. Please make sure proper authentication is in place before SNMP commands (such as «set value») are issued. Using SNMP v1 is strongly discouraged: anyone able to modify SNMP values will have full control over the device's adapters and access to a lot of information about the device's settings.
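For comparison, here is a rough sketch of what an authenticated and encrypted write looks like outside the monitor, using the Net-SNMP `snmpset` command-line tool with SNMP v3. The function name is hypothetical, and the choice of SHA for authentication and AES for privacy is an assumption; the device must be configured with a matching user. The function only builds the argument list and does not run anything.

```python
from typing import List


def snmpv3_set_command(host: str, user: str, auth_pass: str, priv_pass: str,
                       oid: str, value: int) -> List[str]:
    """Build a Net-SNMP `snmpset` argument list for an SNMP v3 authPriv write."""
    return [
        "snmpset", "-v3",
        "-l", "authPriv",             # require authentication and encryption
        "-u", user,                   # SNMP v3 user name
        "-a", "SHA", "-A", auth_pass,  # authentication protocol and passphrase
        "-x", "AES", "-X", priv_pass,  # privacy protocol and passphrase
        host, oid,
        "i", str(value),              # "i" marks the value as an INTEGER
    ]
```

The resulting list can be passed to `subprocess.run` on a test device; passing it as a list rather than a shell string keeps the passphrases from being interpreted by a shell.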

Also, do not forget to test all the scripts on a «sandbox» device, one you can manage in whatever manner you like without affecting real-life data or devices.

You shouldn't shut down the interface you are using to connect to the device. Once it is down, no control is possible until you manage to connect through another interface or reset the device.

Please also note that the case above is the simplest, and not really useful, example of how to control traffic flow. In real life, a number of users share a network device, and selective actions are expected when a user exhausts the traffic quota assigned to them.
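If the shutdown is ever scripted rather than configured by hand, a small guard can catch this mistake early. This is only a sketch: the function name is hypothetical, and it assumes you already know the index of the interface used for management and supply it yourself.

```python
def ensure_not_management_interface(target_if_index: int,
                                    management_if_index: int) -> None:
    """Raise ValueError if the interface to shut down is the management one."""
    if target_if_index == management_if_index:
        raise ValueError(
            f"interface {target_if_index} is used to reach the device; "
            "shutting it down would cut off further control"
        )


# Passes silently: interface 3 is not the management interface (1).
ensure_not_management_interface(3, 1)
```

Calling the guard right before any shutdown command is built means the mistake surfaces as an error in the script rather than as a lost connection.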